In this post I introduce some of the core capabilities of Azure Synapse Analytics and when they are used. I present from the perspective of a data engineer, but it should be easy to translate what is most useful for analysts and data scientists as well. Please continue reading for a quick walkthrough of the capabilities, and if you’d like to see each section of the portal in action, you can also watch this YouTube playlist I created.

What Is Azure Synapse Analytics?

Azure Synapse is a collection of analytics services integrated into one environment. It covers many of your needs for data lake, data warehouse, reporting, analytics, and machine learning workloads. Some of the additional services you depend on, such as Power BI, Azure Data Lake Storage, and Azure Key Vault, are tightly integrated as linked services. All the built-in capabilities have ways to scale and pause (usually through auto-terminate settings) so that you limit the amount you pay for resources that are not actively used. Let’s break down where I see each of the main capabilities being useful.

Serverless Apache Spark is great for data processing and exploration. I often use this for the data pipelines which ingest and transform data. Certain power users of your analytics platform may also use this for exploration, feature processing, and scoring data.

Serverless SQL Pools provide easy querying, exploration, and data extraction. This works well if data is already in a table or a structured format in your data lake. You can use it from many tools that work with SQL syntax. Users who are experienced connecting to databases using SQL Server Management Studio, Azure Data Studio, or a reporting tool can easily switch over to querying your data lake with Synapse Serverless SQL.
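To make the "query your data lake with SQL" idea concrete, here is a minimal sketch of the kind of query a serverless SQL pool can run directly against Parquet files using `OPENROWSET`. The Python helper `build_openrowset_query` and the storage URL are hypothetical names I made up for illustration; the helper only builds the query text, which you would then run from whichever SQL tool you already use.

```python
def build_openrowset_query(storage_url: str, top: int = 10) -> str:
    """Build a T-SQL query that reads Parquet files in the data lake via OPENROWSET.

    This only produces the query text; it does not connect to Synapse.
    """
    # Double any single quotes so the URL is a valid T-SQL string literal.
    safe_url = storage_url.replace("'", "''")
    return (
        f"SELECT TOP {int(top)} *\n"
        "FROM OPENROWSET(\n"
        f"    BULK '{safe_url}',\n"
        "    FORMAT = 'PARQUET'\n"
        ") AS [result];"
    )


# Hypothetical storage account, container, and folder.
query = build_openrowset_query(
    "https://mydatalake.dfs.core.windows.net/curated/sales/*.parquet"
)
print(query)
```

You can paste the printed query into a SQL script connected to the Built-in serverless pool, assuming your account has read access to the underlying storage.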

Dedicated SQL Pools are for high-performance analytic queries. A dedicated pool is a Massively Parallel Processing (MPP) system with separate storage and compute that is optimized to work together and scale to very large volumes. As with any MPP system, large tables are distributed and queried in parallel, while smaller dimension tables can be replicated for easy joins from the larger tables. This adds some complexity when designing your tables, but the Microsoft Learn path has solid guidance to help you understand the options and tradeoffs. Dedicated SQL is the part of Azure Synapse that you are most likely to compare to Snowflake, Redshift, Vertica, Teradata, and others. It is not cheap, so consider your budget and analyze costs carefully to determine the right pool size.
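If the distributed versus replicated idea is new to you, the toy simulation below may help. It is a sketch in plain Python, not how the engine is implemented: the table contents, the `distribution_for` helper, and the CRC32 hash are all assumptions for illustration. The one real detail is that a dedicated SQL pool spreads data across 60 distributions.

```python
import zlib
from collections import defaultdict

NUM_DISTRIBUTIONS = 60  # a dedicated SQL pool divides data into 60 distributions


def distribution_for(key) -> int:
    """Pick a distribution for a key with a stable hash (illustrative only)."""
    return zlib.crc32(str(key).encode("utf-8")) % NUM_DISTRIBUTIONS


# Hypothetical large fact table and small dimension table.
sales = [
    {"sale_id": i, "customer_id": i % 500, "product_id": i % 7, "amount": 10 + i % 5}
    for i in range(10_000)
]
products = {pid: f"product-{pid}" for pid in range(7)}

# Hash-distribute the fact table on customer_id: rows with the same key
# always land in the same distribution.
distributions = defaultdict(list)
for row in sales:
    distributions[distribution_for(row["customer_id"])].append(row)


def local_totals(rows, replicated_dim):
    """Join one distribution's rows to its local copy of the replicated dimension."""
    totals = defaultdict(int)
    for row in rows:
        totals[replicated_dim[row["product_id"]]] += row["amount"]
    return totals


# Each distribution can do its join and aggregation independently (run
# sequentially here); the partial results are then combined.
combined = defaultdict(int)
for rows in distributions.values():
    for product_name, total in local_totals(rows, products).items():
        combined[product_name] += total

print(dict(combined))
```

Because every distribution holds a full copy of the small `products` table, the join needs no data movement. That is the tradeoff behind choosing a replicated table for small dimensions and a hash-distributed table for large facts.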

Synapse Pipelines is a no-code data ingestion tool, which means you develop the pipelines with a web user interface. It has many activities that you can choose from and configure to build out your pipelines. It also has templates to help you get your framework built. I prefer to use this for orchestrating Spark scripts and serverless SQL tasks for data processing, but that is mostly a matter of personal preference. Several years ago I wrote about why I like code over ETL tools for building data pipelines, if you want to read my perspective on it.

Data Explorer is a newer addition to Azure Synapse, so I do not have real use cases for it yet, but it is best suited for log data and time-series data. It has the advantage of easily loading large amounts of data, but once the data is loaded you should not expect to update those records.

Data Hub

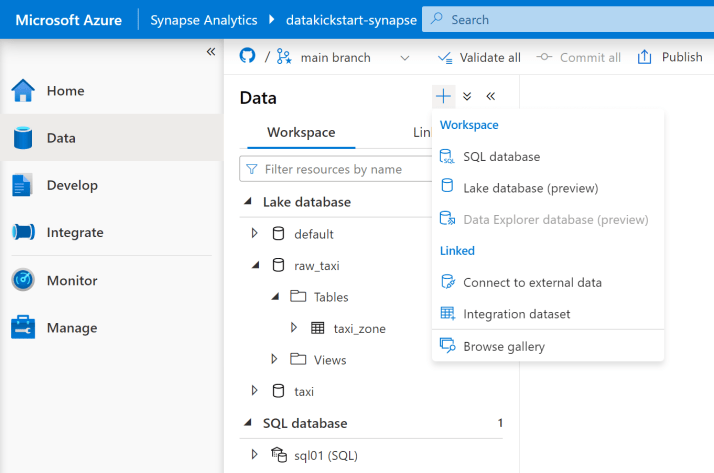

The data hub is where you go to browse the databases and linked storage accounts that make up your data lake. The “Lake database” section is where databases created from Synapse Spark show up, and you can query those using Spark or Serverless SQL. In the “SQL database” section you will see your Serverless SQL and Dedicated SQL databases, which you can query with the respective pool. A common question is whether it is possible to query the Serverless SQL tables from Dedicated SQL and vice versa. The answer is no; however, you can use PolyBase in Dedicated SQL to set up external tables against the same underlying files in the data lake.

Another component of the data hub is the linked storage accounts, which show up under Azure Blob Storage and Azure Data Lake Storage Gen2. You can also click the + icon and select Browse Gallery to find sample datasets or other sample resources. Your Integration Datasets, which are used in Synapse Pipelines, are also available here, but I don’t often find value in viewing those.

Develop Hub

The develop hub holds the SQL Scripts and Notebooks that have been developed. SQL Scripts can query either the serverless Built-in pool or a dedicated SQL pool that was created in your workspace. This is often a place to save and share analysis and data exploration scripts. You can also save your scripts that create databases, tables, and users. You could reference these scripts from a deploy process but I often manage them separately or build them into a pipeline.

The Notebooks within the develop hub are for Apache Spark scripts. Apache Spark is a distributed computing framework that can run in several cluster modes, with an API that can be used from several languages. For Azure Synapse Spark, you have the option to use Python, Scala, C#, or Spark SQL. You choose a primary language for your notebook but can have individual cells in the notebook run in another language. The variables and objects you create in a PySpark (Python) cell are not available in a Spark (Scala) cell. However, you can save data as a temporary table to quickly read it from another language cell in the same notebook. For a deeper dive into Synapse Apache Spark, you can watch one of my earlier Spark videos where I walk through an example in Python, Scala, and C# notebooks.

Integrate Hub

The integrate hub is where you find the Synapse Pipelines capability which is almost identical to Azure Data Factory. The main way I use this is for orchestration, which just means to call code executions and set the dependencies which define what must complete before the next code runs. The related video I created has more detail for you but there are many other resources if you want to learn about all the different “Activities” you can choose from when working with Azure Synapse Pipelines. My main use of pipelines, other than running Spark notebooks, is to define a Copy Activity which can easily copy tables from one data system to another. I still prefer Spark notebooks most of the time, but if copying from a source where I need to use a specific IP or be part of a network, then using a Copy Activity with a self-hosted integration runtime is my easiest option to configure. When the pipeline is ready to schedule, you can add a trigger to kick it off on a certain schedule or based on an event.
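Orchestration is easier to picture with a small example. The sketch below is plain Python, not the Synapse Pipelines API: the activity names and the `run_pipeline` helper are hypothetical, and the standard library's `graphlib` stands in for the dependency arrows you would draw between activities in the pipeline canvas.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each activity maps to the activities that must
# complete before it is allowed to start.
pipeline = {
    "copy_orders": set(),
    "copy_customers": set(),
    "transform_notebook": {"copy_orders", "copy_customers"},
    "load_reporting_tables": {"transform_notebook"},
}


def run_order(dependencies):
    """Return one valid execution order that respects every dependency."""
    return list(TopologicalSorter(dependencies).static_order())


def run_pipeline(dependencies, run_activity):
    """Run each activity only after everything it depends on has finished."""
    completed = []
    for name in run_order(dependencies):
        run_activity(name)  # stand-in for a notebook run or a copy
        completed.append(name)
    return completed


executed = run_pipeline(pipeline, lambda name: print(f"running {name}"))
```

In a real pipeline the two copy activities could run in parallel since neither depends on the other; this sketch runs them one at a time to keep the ordering logic easy to follow.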

Monitor Hub

The monitor hub is the place to find details about code execution and resource usage within the Azure Synapse workspace. As a data engineer, I use the Apache Spark applications and the Pipeline runs sections often. I also go here to check what Spark pool resources and SQL pools are currently running so I can stop any that are not required.

Manage Hub

The manage hub is where you can create and manage (of course) the resources used in the Synapse workspace. SQL pools and Apache Spark pools are used often and are hopefully self-explanatory. Access control is important since Synapse users need to be added to a role before they can do anything. Access to the workspace itself is not enough to make users productive within the Synapse workspace, so the Access control section is the key to permissions.

Additional things you may use the manage hub for are:

- Linked Services to set up connections to Azure services that need to be integrated into the workspace.

- Git configuration to set up a code repository.

- Workspace packages for adding additional libraries to Apache Spark pools.

- Triggers to schedule pipelines to run.

Wrap up

In this post, I walked you through some of the most useful things in each section of the Azure Synapse Analytics workspace. The goal is to get you oriented to what is available and when you are likely to use each capability. I expect the videos I made on Synapse will help most learners get an introduction to the environment, but I may add more written tutorials in the future for those who prefer reading over videos.